The Technion CS Lambda HPC Cluster supports coursework, training, and research in high-performance computing (HPC) for undergraduate students.

Highlights:

- 4 powerful Supermicro servers

- 128 Intel Xeon E5-2683 v4 CPU cores

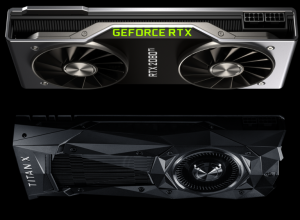

- 32 NVIDIA GPUs (4 x GTX 1080 Ti (11 GB); 6 x RTX 2080 (8 GB); 18 x RTX 2080 Ti (11 GB); 8 x TITAN Xp (12 GB))

To open a course account on the Lambda cluster, please send a list of the students' Technion email accounts to admlab@cs.technion.ac.il.

Users can request an account on the Lambda cluster by filling out the account request form, available at this link: account request form.